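Why logging systems collect container logs from a specific directory

This article introduces the common Kubernetes logging architectures and examines why, under the node logging agent pattern, logs are collected from the /var/log/containers/ directory rather than from any other directory.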

Logging Architecture

According to the Kubernetes Logging Architecture[1] documentation, logging falls broadly into two categories, node-level and cluster-level:

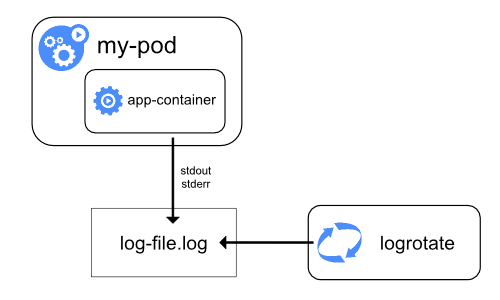

Logging at the node level

At the node level, logs are rotated either with the logrotate command or through the kubelet parameters containerLogMaxSize and containerLogMaxFiles.
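To make the effect of a size limit and a file-count limit concrete, here is a minimal Python sketch of size-based rotation. It is not the kubelet's or logrotate's implementation: the function name `rotate_if_needed`, the `.1`, `.2` suffix scheme, and the choice to count the live file toward `max_files` are all assumptions made for illustration.

```python
import os


def rotate_if_needed(path: str, max_size: int, max_files: int) -> bool:
    """Rotate `path` once it grows beyond `max_size` bytes.

    Hypothetical helper: keeps at most `max_files` files in total
    (the live file plus path.1 .. path.(max_files - 1)).
    Returns True when a rotation happened.
    """
    if not os.path.exists(path) or os.path.getsize(path) <= max_size:
        return False

    oldest = max_files - 1  # highest suffix that may exist after rotation
    # Drop the oldest rotated file so the total never exceeds max_files.
    if oldest >= 1 and os.path.exists(f"{path}.{oldest}"):
        os.remove(f"{path}.{oldest}")
    # Shift the remaining rotated files up by one: .1 -> .2, .2 -> .3, ...
    for index in range(oldest - 1, 0, -1):
        if os.path.exists(f"{path}.{index}"):
            os.replace(f"{path}.{index}", f"{path}.{index + 1}")
    # The live file becomes .1 (skipped when only one file may be kept).
    if oldest >= 1:
        os.replace(path, f"{path}.1")
    # Start a fresh, empty live file.
    open(path, "w").close()
    return True
```

Calling `rotate_if_needed("app.log", max_size=10 * 1024 * 1024, max_files=5)` periodically would keep roughly five files of up to 10 MiB each under these assumptions.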

Cluster-level logging architectures

Cluster-level logging can be implemented in the following three ways:

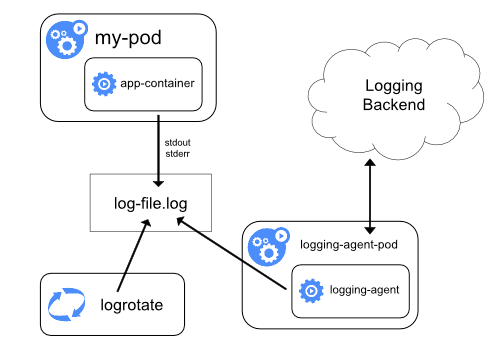

Using a node logging agent

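A logging agent is deployed on every node to collect application logs and ship them to the logging system. Fluentd and Grafana Loki, for example, are both close to this model: the agent is deployed as a DaemonSet, so each node runs one agent process that collects the logs on that node. Note that if the logging system itself depends on the Kubernetes environment, it may also go down when Kubernetes is not working properly, so pay attention to environment dependencies and single points of failure.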

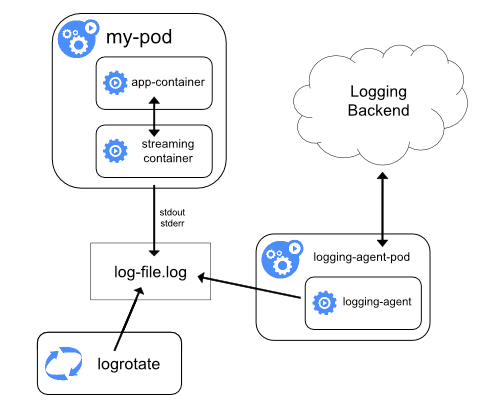

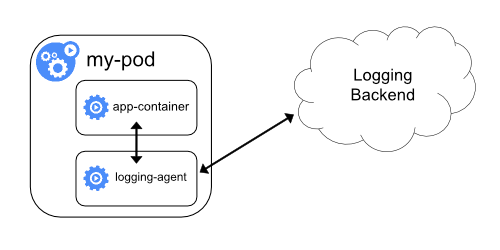

Using a sidecar container with the logging agent

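- Streaming sidecar container: Normally a container writes its logs to stdout and stderr, and the container runtime stores them in its default directory. Some applications, however, do not write to stdout or stderr by default. If a legacy system writes its logs to a fixed location, for example, a sidecar container can run the tail command to keep reading that location and re-emit the lines, so that the logging agent can collect them and send them to the logging system.

- Sidecar container with a logging agent: The sidecar container itself collects the application logs and sends them to the logging system. For example, if only the logs of one particular application need to be collected, you can write your own script or call an HTTP API to push the logs to the logging system.

- Exposing logs directly from the application: The application sends its logs directly to the logging system.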
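The streaming sidecar idea above can be sketched in Python as a small `tail`-like loop: read whatever has been appended to a file since the last read and print it to stdout, where the container runtime picks it up. This is a minimal sketch under stated assumptions, not a replacement for `tail -F`: the names `read_new_lines` and `stream_forever` are hypothetical, and file rotation or truncation is not handled.

```python
import time
from typing import List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return the complete lines appended after `offset` and the new offset."""
    with open(path, "rb") as handle:
        handle.seek(offset)
        chunk = handle.read()
    # Consume only up to the last newline, so a half-written line
    # is left in place and picked up on the next call.
    end = chunk.rfind(b"\n") + 1
    text = chunk[:end].decode("utf-8", errors="replace")
    lines = text.split("\n")[:-1]  # drop the empty piece after the final "\n"
    return lines, offset + end


def stream_forever(path: str, interval: float = 1.0) -> None:
    """Poll `path` and copy new lines to stdout (never returns)."""
    offset = 0
    while True:
        try:
            lines, offset = read_new_lines(path, offset)
        except FileNotFoundError:
            lines = []  # the application has not created the file yet
        for line in lines:
            print(line, flush=True)
        time.sleep(interval)
```

In a sidecar, `stream_forever` would be pointed at a log file on a volume shared with the application container. The saved offset plays the same role as a position file in a node agent: it records how far the file has already been read.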

Why we collect logs from the /var/log/containers/ directory

So how do these logging systems actually collect application logs on a node?

Take the official EKS solution, CloudWatch Agent for Container Insights Kubernetes Monitoring, as an example. It offers two logging agents, Fluentd and Fluent Bit.

Interestingly, these two different agents both read the same /var/log/containers/*.log path for application logs. Their default configurations are shown below:

Fluentd

The default Fluentd containers.conf collects /var/log/containers/*.log:

    containers.conf: |
      <source>
        @type tail
        @id in_tail_container_logs
        @label @containers
        path /var/log/containers/*.log
        exclude_path ["/var/log/containers/cloudwatch-agent*", "/var/log/containers/fluentd*"]
        pos_file /var/log/fluentd-containers.log.pos
        tag *
        read_from_head true
        <parse>
          @type json
          time_format %Y-%m-%dT%H:%M:%S.%NZ
        </parse>
      </source>
      ...
      ...

Fluent Bit

The default Fluent Bit application-log.conf collects /var/log/containers/*.log:

    application-log.conf: |
      [INPUT]
        Name tail
        Tag application.*
        Exclude_Path /var/log/containers/cloudwatch-agent*, /var/log/containers/fluent-bit*, /var/log/containers/aws-node*, /var/log/containers/kube-proxy*
        Path /var/log/containers/*.log
        Docker_Mode On
        Docker_Mode_Flush 5
        Docker_Mode_Parser container_firstline
        Parser docker
        DB /var/fluent-bit/state/flb_container.db
        Mem_Buf_Limit 50MB
        Skip_Long_Lines On
        Refresh_Interval 10
        Rotate_Wait 30
        storage.type filesystem
        Read_from_Head ${READ_FROM_HEAD}
      ...

Does a Kubernetes cluster write container logs to the /var/log/containers directory by design? According to the Kubernetes Proposals, yes. To support cluster-level log collection, the kubelet creates symbolic links under /var/log/containers that point to the log files of the container runtime (such as Docker), and names each link in the format /var/log/containers/<pod_name>_<pod_namespace>_<container_name>-<container_id>.log. The proposal states:

In a production cluster, logs are usually collected, aggregated, and shipped to a remote store where advanced analysis/search/archiving functions are supported. In kubernetes, the default cluster-addons includes a per-node log collection daemon, `fluentd`. To facilitate the log collection, kubelet creates symbolic links to all the docker containers logs under `/var/log/containers` with pod and container metadata embedded in the filename.

`/var/log/containers/<pod_name>_<pod_namespace>_<container_name>-<container_id>.log`

The fluentd daemon watches the `/var/log/containers/` directory and extract the metadata associated with the log from the path. Note that this integration requires kubelet to know where the container runtime stores the logs, and will not be directly applicable to CRI.
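Because the metadata is embedded in the filename, an agent can recover it with a single regular expression. The Python sketch below parses the format quoted above. The function name `parse_container_log_path` is hypothetical, and the pattern assumes that pod names and namespaces contain no underscores and that the container ID is a 64-character lowercase hex string; neither assumption is stated in the proposal text.

```python
import os
import re
from typing import Dict, Optional

# <pod_name>_<pod_namespace>_<container_name>-<container_id>.log
# Assumption: the container ID is 64 lowercase hex characters.
LOG_NAME = re.compile(
    r"^(?P<pod_name>[^_]+)"
    r"_(?P<pod_namespace>[^_]+)"
    r"_(?P<container_name>.+)"
    r"-(?P<container_id>[0-9a-f]{64})\.log$"
)


def parse_container_log_path(path: str) -> Optional[Dict[str, str]]:
    """Extract pod and container metadata from a /var/log/containers path.

    Returns None when the filename does not follow the expected format.
    """
    match = LOG_NAME.match(os.path.basename(path))
    return match.groupdict() if match else None
```

Anchoring the container ID to a fixed length at the end of the name is what lets the container name itself contain hyphens, as in `web-server`.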

Note: As of April 2021, the related proposals have been migrated from Kubernetes Proposals to the kubernetes/enhancements GitHub repository.

Summary

So today, under the Kubernetes cluster-level "Using a node logging agent" architecture, agents collect logs from the /var/log/containers directory, which serves as the shared convention for collecting container application logs in Kubernetes.
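As a rough way to see this convention on a node, the Python sketch below lists the `*.log` entries in a directory, skips those matching exclude patterns, and resolves each symbolic link to the file it points at. It only approximates the path and exclude settings of the agents with `glob` and `fnmatch`, whose matching rules may differ from those of Fluentd or Fluent Bit. The function name `list_container_logs` is hypothetical.

```python
import fnmatch
import glob
import os
from typing import Dict, Iterable


def list_container_logs(
    log_dir: str = "/var/log/containers",
    exclude_patterns: Iterable[str] = (),
) -> Dict[str, str]:
    """Map each selected log path to the real file behind its symlink."""
    patterns = list(exclude_patterns)
    resolved = {}
    for path in sorted(glob.glob(os.path.join(log_dir, "*.log"))):
        # Skip entries such as the agent's own logs.
        if any(fnmatch.fnmatch(path, pattern) for pattern in patterns):
            continue
        # realpath follows the whole chain of symbolic links.
        resolved[path] = os.path.realpath(path)
    return resolved
```

For example, `list_container_logs(exclude_patterns=["/var/log/containers/fluent-bit*"])` would return every other container log on the node together with the runtime file each link resolves to.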